MLPerf

Machine Learning Benchmarks That Make ML Evaluation Faster & Smarter

The Challenge

Machine learning (ML) has emerged as one of the most popular technologies in today’s digital landscape. However, with a growing number of ML-powered tools on the market, companies face the challenge of knowing which solutions truly deliver accurate, reliable results.

Evaluating these tools is both time-consuming and complex, requiring a combination of metrics to accurately assess a system and ensure it interprets human language across different contexts.

The Vision

The founders of MLPerf recognized the growing demand for a tool that simplifies machine learning benchmarking. Their goal was to create a tool capable of comparing machine learning workloads across a range of devices, including laptops, desktops, and workstations.

Their team quickly recognized that developing an MLPerf benchmark tool to guide smarter AI decisions required a comprehensive strategy and a partner who knew how to leverage advanced technologies.

The Scopic Solution

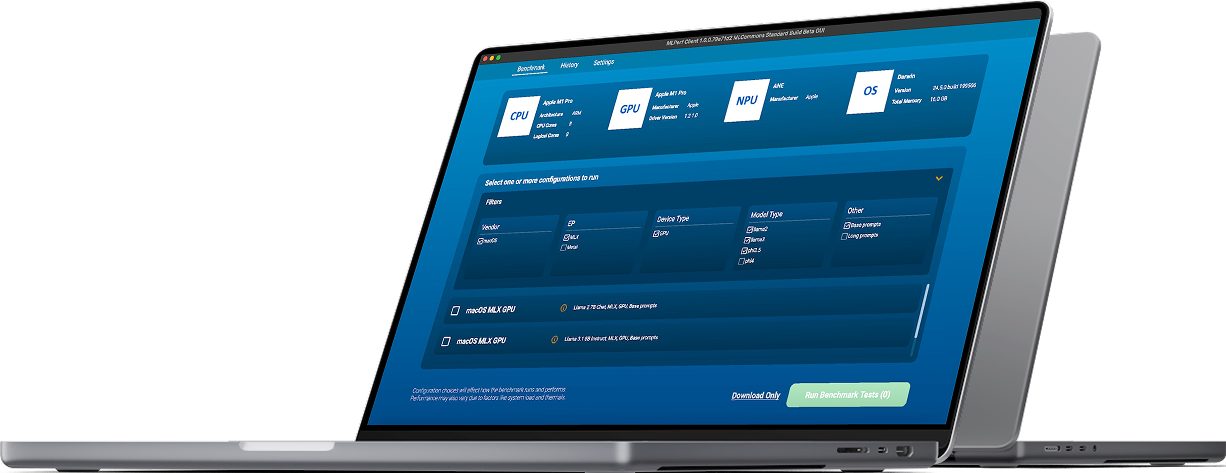

MLCommons partnered with us to develop MLPerf, a leading benchmarking tool designed to measure the performance of machine learning systems on a wide range of hardware.

Our team leveraged advanced technologies, such as the Llama tokenizer, OrtGenAI, and NativeOpenVINO, to create a tool capable of:

- Evaluating performance on diverse ML tasks, including image recognition, natural language processing, and recommendation systems.

- Comparing results across multiple hardware and software configurations.

- Optimizing system performance for specific workloads using insightful metrics.

As part of the second phase of our work, we developed and optimized the solution’s UI for both desktop and iPad platforms, incorporating valuable input from MLCommons and independent hardware vendors (IHVs) such as AMD, NVIDIA, Intel, Microsoft, and Qualcomm.

Our developers also implemented automated testing and stepped in to manage time-sensitive responsibilities typically handled by IHVs, delivering everything needed to help MLPerf become a trusted name in the AI and machine learning community.

Cross-platform benchmarking

Diverse ML task evaluation